03-09-24

Image source: https://ubuntu.com/engage/mlops-guide

W

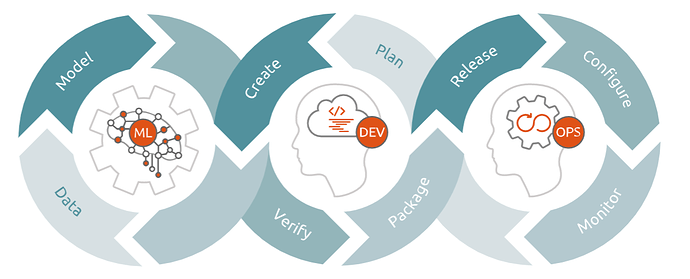

hen one thinks about MLOps they probably think about just the model. However, the model is just one of three "acts" that MLOps is associated with. The three acts are summarized below. But first, why three acts? One thing I say often is that building a machine learning model is a holistic activity. You cannot build a model without data. You cannot model without the right deep learning architecture or algorithm. You cannot model by blowing your budget on compute resources. And finally, you cannot model by deploying a model into the "wild" and hoping it is working well. It won't for long. Data, model building/selection, deployment/XAI are the three acts discussed below.Act I: Data.

Data is the most important factor when modeling. When I started out with data science, I thought that models and algorithms could "coerce" data into yielding its secrets. Obviously this was wrong. Machine learning and AI is the ultimate manifestation of GIGO (garbage in garbage out). You can do a lot with great data. You can't do much with bad data, but plan to source better data and do the best you can with what you have. The first act is creating and maintaining data that can be modeled and used for analytics. This is more than just data engineering. This is data sourcing, engineering and feature selection. This is also reconciling ground truth labels with predictions to monitor model performance.
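To make the last point concrete, here is a minimal sketch of reconciling logged predictions with ground truth labels that arrive later. The record ids, labels and the `reconcile` helper are all hypothetical and only illustrate the idea; a real pipeline would read both sides from wherever predictions and labels are stored.

```python
def reconcile(predictions, ground_truth):
    """Join predictions to late-arriving labels by record id.

    Both arguments are dicts mapping a record id to a class label.
    Returns (accuracy, matched_count, unlabeled_ids).
    """
    matched = [rid for rid in predictions if rid in ground_truth]
    unlabeled = sorted(rid for rid in predictions if rid not in ground_truth)
    if not matched:
        return None, 0, unlabeled  # nothing to score yet
    correct = sum(predictions[rid] == ground_truth[rid] for rid in matched)
    return correct / len(matched), len(matched), unlabeled


# Hypothetical data: four logged predictions, three labels received so far.
predictions = {"a1": "spam", "a2": "ham", "a3": "spam", "a4": "ham"}
ground_truth = {"a1": "spam", "a2": "spam", "a3": "spam"}

accuracy, n_matched, unlabeled = reconcile(predictions, ground_truth)
print(f"accuracy={accuracy:.2f} on {n_matched} labeled records; waiting on {unlabeled}")
```

Tracking the unlabeled ids separately matters because accuracy computed on only the labeled subset can look better or worse than the model's true performance.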

Act II: Modeling. Bad data scientists or machine learning engineers pick an algorithm or deep learning architecture and run with it. The worst of the bad use models/architectures that are misaligned with their data type. I have seen "data scientists" use NLP GenAI models like Llama or ChatGPT to analyze image or binary data. This is an abuse. A better modeler selects an architecture/algorithm and tries various hyperparameters. The best modelers try multiple models and multiple hyperparameters. Modeling well takes time. This act is about more than just building a model. Another important component is the physical compute needed for training neural networks. In an ideal situation, we use compute only when it is needed and release the resources when training is done. Finally, modeling needs to be transparent and reproducible, and model binaries should be saved and versioned.
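As a rough sketch of "multiple models and multiple hyperparameters", the toy experiment below compares two model families, each with several settings, on held-out data. Everything here is an assumption made for illustration: the synthetic data, the two tiny model families and the parameter values. In practice you would swap in real models and a proper experiment tracker.

```python
import random

random.seed(0)  # fixed seed so the experiment is reproducible

# Hypothetical toy data: one numeric feature, label is 1 when it exceeds 0.6.
points = [random.random() for _ in range(200)]
data = [(x, int(x > 0.6)) for x in points]
train, valid = data[:150], data[150:]


def threshold_model(cutoff):
    """Model family 1: predict 1 when the feature is above a fixed cutoff."""
    return lambda x: int(x > cutoff)


def knn_model(k):
    """Model family 2: majority vote among the k nearest training points."""
    def predict(x):
        nearest = sorted(train, key=lambda row: abs(row[0] - x))[:k]
        votes = sum(label for _, label in nearest)
        return int(votes * 2 > k)
    return predict


def accuracy(model, rows):
    """Fraction of rows the model labels correctly."""
    return sum(model(x) == y for x, y in rows) / len(rows)


# Every (family, hyperparameter) pair is one experiment.
candidates = [("threshold", c, threshold_model(c)) for c in (0.3, 0.5, 0.7)]
candidates += [("knn", k, knn_model(k)) for k in (1, 5, 15)]

# Score all candidates on held-out data and keep the full log, not just the winner.
results = [(name, param, accuracy(model, valid)) for name, param, model in candidates]
best = max(results, key=lambda r: r[2])
for name, param, score in results:
    print(f"{name:<10} param={param:<4} valid_acc={score:.3f}")
print("best:", best)
```

Keeping the whole `results` log, along with the seed, is what makes the comparison transparent and repeatable later.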

Act III: CI/CD and CE. When we talk about MLOps, this is the Ops part. Notice how many of the activities in the first two acts rely on continuous development. We need to constantly improve and experiment with building new models; this is CE (Continuous Experimentation). Once a model is deployed, we must monitor its performance (XAI). If a model stops performing, or when we have a new champion model, we can use Ops tools to speed up deployment. If we can get humans in the loop or otherwise obtain new observations, we can reconcile predictions with those observations as an additional way to monitor performance. We need to update data sources as new observations come in. Finally, before we deploy or build a new model, we need to make sure our data passes tests, our software passes tests and so on.
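Here is one way the "data passes tests" gate and the champion swap could look in code. This is a minimal sketch under my own assumptions: the field names, the valid age range, the scores and the `min_gain` margin are all hypothetical, and a real pipeline would run checks like these inside its CI/CD tooling.

```python
def validate_rows(rows, required_fields, ranges):
    """Return a list of human-readable data problems; empty means the data passed."""
    problems = []
    for i, row in enumerate(rows):
        for field in required_fields:
            if row.get(field) is None:
                problems.append(f"row {i}: missing {field}")
        for field, (low, high) in ranges.items():
            value = row.get(field)
            if value is not None and not (low <= value <= high):
                problems.append(f"row {i}: {field}={value} outside [{low}, {high}]")
    return problems


def should_promote(champion_score, challenger_score, min_gain=0.01):
    """Promote the challenger only when it beats the champion by a margin."""
    return challenger_score - champion_score >= min_gain


# Hypothetical batch and scores, for illustration only.
batch = [
    {"age": 34, "income": 52000.0},
    {"age": -3, "income": 41000.0},  # fails the range check
    {"age": 51, "income": None},     # fails the required-field check
]
problems = validate_rows(batch, ["age", "income"], {"age": (0, 120)})

if problems:
    print("blocking deployment:", problems)
elif should_promote(champion_score=0.91, challenger_score=0.93):
    print("promote challenger")
else:
    print("keep champion")
```

The data check runs first on purpose: a challenger's score means little if the data behind it has not passed its tests.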

The above points are a bit scary, but they don't have to be. There are a variety of technologies that can help with MLOps. The goal of this post is not to overwhelm. The point is that all of this activity has to happen for every model. MLOps is not a nicety. It is a necessity and a competitive advantage. When your Ops program is on point, you are winning. Hopefully this post is helpful and communicates the importance of MLOps.